Clinical modelling around the concepts of warnings, alerts and notifications is incredibly complex, and each of the terms is loaded with confusion. It is not going to be easy to navigate this area and achieve a common understanding that will underpin information models for sensible, cross-paradigm decision support.

Anatomy of a Problem... a Diagnosis...

Anatomy of an Adverse Reaction

Discussion about how to represent some of the commonest clinical concepts in an electronic health record or message has been raging for years. There has been no clear consensus. One of those tricky ones - so ubiquitous that everyone wants to have an opinion - is how to create a computable specification for an adverse reaction.

In the past few years I have been involved in much research and many discussions with colleagues around the world about how to represent an adverse reaction in a detailed clinical model. I'd like to share some of these learnings...

Making sense of 'Severity'

'Severity' is a pretty simple clinical concept, isn't it?

I thought so too until I first sat down to create a single, re-usable archetype to represent 'Severity'. I soon discovered that I had significantly underestimated it - the challenge was greater than appeared at first glance.

The archetype development process is usually relatively straightforward - a brain dump of all related information into a mind map, followed by rearranging the mind map until sensible patterns emerge. They usually do, but not this time.

However, the more I investigated and researched, the more I could see that clinicians express severity in a variety of ways, sometimes mixing and 'munging' various concepts together in such a way that humans can easily make sense of it, but it is much harder to represent in a way that is both clinically sensible and computable.

The simple trio of 'Mild', 'Moderate' and 'Severe' is commonly used as a gradation to express the severity of a condition, injury or adverse event. Occasionally these terms are defined for a given condition or injury; most often, however, they are subjective, and there should be concern that one clinician's mild might be another's moderate. Some also include intermediate terms, such as 'Mild-to-moderate' and 'Moderate-to-severe', but one has to wonder whether these more subtle differences make the judgement even more unreliable, especially if we need to exchange information between systems.

Others add 'Trivial' – is this less than 'Mild'? By how much? Is there a true gradation here? And what of 'Extreme'? Still others add 'Life-threatening' and 'Death' – but are these really expressing severity? Or are they expressing clinical impact or even an outcome?

When exploring severity I found examples of severity expressed by clinicians in many ways. This list is by no means exhaustive:

- Pain – expressed as intensity, often using a visual analogue scale as a means to record it, i.e. rated from 0 to 10

- Burns – describing percentage of body involved e.g. >80% burns

- Burns – describing the depth of burn by degree e.g. first degree

- Perineal tears – describe the extent and damage by degree – e.g. second degree tear

- Facial tic – expressed using frequency e.g. occasional through to unrelenting

- Cancer – expressed as a grade, clinical or pathological

- Rash – extent or percentage of a limb or torso covered.

- Minor or major

- Mild, disabling, life-threatening

- Relative severity – better, worse etc

- Functional impact – ordinary activity, slight limitation, marked limitation, inability to perform any physical activity

- Short or long term persistence

- Acute or chronic

In practice, clinicians can work interchangeably with any of these expressions and make reasonable clinical sense of them. The challenge when creating archetypes, and other computable models, is to ensure that clinicians can express what they need in a health record, exchange that information safely with other clinicians, and allow for knowledge-based activities such as decision support.

In addition, there also needs to be a clear distinction between 'severity' and other, related qualifiers that provide additional context about 'seriousness' of the condition, injury or adverse reaction. These sometimes get thrown into the mix as well, including:

- Clinical impact (or significance);

- Clinical treatment required; and

- Outcomes.

Clinical impact/significance might include:

- None - No clinical effect observed

- Insignificant - Little noticeable clinical effect observed.

- Significant - Obvious clinical effect observed

- Life-threatening - Life-threatening effect observed

- Death – Individual died.

Immediate clinical treatment required might include:

- No treatment

- Required clinician consultation

- Required hospitalisation

- Required Intensive Care Unit

Outcomes might include:

- Recovered/resolved

- Recovering/resolving

- Not recovered/resolved

- Fatal

Then there are those that recovered or the condition resolved but there were other sequelae such as congenital anomalies in an unborn child...
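To make the distinction above concrete, here is a minimal sketch, in Python, of keeping clinical impact, treatment required and outcome as separate coded fields rather than folding them into 'severity'. The class and field names (`ClinicalImpact`, `TreatmentRequired`, `Outcome`, `ReactionSummary`) are my own illustrative assumptions, not part of any published archetype; the coded values are simply the lists above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ClinicalImpact(Enum):
    NONE = "No clinical effect observed"
    INSIGNIFICANT = "Little noticeable clinical effect observed"
    SIGNIFICANT = "Obvious clinical effect observed"
    LIFE_THREATENING = "Life-threatening effect observed"
    DEATH = "Individual died"


class TreatmentRequired(Enum):
    NO_TREATMENT = "No treatment"
    CLINICIAN_CONSULTATION = "Required clinician consultation"
    HOSPITALISATION = "Required hospitalisation"
    INTENSIVE_CARE = "Required Intensive Care Unit"


class Outcome(Enum):
    RECOVERED = "Recovered/resolved"
    RECOVERING = "Recovering/resolving"
    NOT_RECOVERED = "Not recovered/resolved"
    FATAL = "Fatal"


@dataclass(frozen=True)
class ReactionSummary:
    """Hypothetical record: each qualifier is optional and independent."""
    severity: Optional[str] = None            # e.g. 'Mild', 'Moderate', 'Severe'
    impact: Optional[ClinicalImpact] = None
    treatment: Optional[TreatmentRequired] = None
    outcome: Optional[Outcome] = None


# A moderate reaction that still needed hospitalisation: the two facts
# are recorded side by side instead of being merged into one scale.
example = ReactionSummary(
    severity="Moderate",
    impact=ClinicalImpact.SIGNIFICANT,
    treatment=TreatmentRequired.HOSPITALISATION,
    outcome=Outcome.RECOVERING,
)
```

Keeping the fields separate means 'Life-threatening' and 'Fatal' never have to masquerade as points on a severity scale.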

Severity ain't simple.

Over time, and after repeatedly returning to it when modelling various archetypes including Adverse Reaction and Problem/Diagnosis, I've come to the conclusion that it is not useful or helpful for us to model 'severity' in a single, re-usable archetype. It seems to work better as a single qualifier element within archetypes – then we can bind it to terminology value sets that are useful for that specific archetype concept and for the use-case context.
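As a rough illustration of that idea, the sketch below treats severity as one qualifier whose allowed values depend on the context it is used in. It is only a toy in Python, not how archetype tooling actually binds terminology: the context keys, the `SEVERITY_VALUE_SETS` table and the `is_valid_severity` helper are all hypothetical names, and the value sets are taken from the examples earlier in this post.

```python
from typing import Dict, Tuple

# Hypothetical bindings: one severity element, a different value set per context.
SEVERITY_VALUE_SETS: Dict[str, Tuple[str, ...]] = {
    "adverse_reaction": ("Mild", "Moderate", "Severe"),
    # Pain intensity rated from 0 to 10, stored here as strings.
    "pain_intensity": tuple(str(score) for score in range(0, 11)),
    "functional_impact": (
        "Ordinary activity",
        "Slight limitation",
        "Marked limitation",
        "Inability to perform any physical activity",
    ),
}


def is_valid_severity(context: str, value: str) -> bool:
    """Check a recorded value against the value set bound to its context."""
    if context not in SEVERITY_VALUE_SETS:
        raise KeyError(f"No severity value set bound for context: {context}")
    return value in SEVERITY_VALUE_SETS[context]
```

For example, `is_valid_severity("adverse_reaction", "Moderate")` is true, while a pain score such as `"7"` is rejected in that same context because it belongs to a different value set.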

When a clinical knowledge pattern is easily identified, creating an archetype is easy - the archetype almost writes itself! So over time I've learned not to try to force the modelling. Some archetypes 'work'; some, like this one, just don't.

Clinical Knowledge Governance in a Web2.0 world

Establishing and maintaining the quality of clinical knowledge is clearly the domain of the expert clinicians themselves. This is a broadly accepted principle for management and governance of the traditional clinical knowledge artefacts. However this assumption needs re-evaluation when we need to establish quality, safety and ‘fitness for purpose’ of computable clinical knowledge artefacts that populate Electronic Health Record (EHR) systems.

Clinical knowledge has traditionally been created and shared through formal publication and peer-review processes that have been adjudicated by committees of clinical experts. Those expert committees have been appointed through a credentialing process and have had jurisdiction and oversight over the entire publishable content – ‘the buck stops here’. Before the rise of the internet, face-to-face meetings have been where most of the committee work has been done, and the process has most often been slow and expensive but delivered good quality publications. The opportunity cost to each participating clinician has been high with recurring interruptions to their clinical activities. Revision of those publications at a later date repeats this process, taking considerable time, money and resources.

Certainly in recent times, there have been more electronic tools to support these processes – email, teleconferences and videoconferences have improved the logistics of the process, but essentially the process remains unchanged.

Given the increasing traction of electronic health records, there is a parallel movement to develop and share computable clinical content definitions that can be created, published and implemented by: multiple clinical disciplines; generalists and specialists; primary, secondary and tertiary care organisations; population health planning; clinical researchers; and knowledge-enabled systems such as clinical decision support applications. They need to be language independent and translatable, in order to transport health information across national boundaries.

These kinds of computable clinical models need input from many experts, clinicians and others, to ensure that they are not only clinically appropriate but also support safe data usage in our EHRs. These models are increasingly being created with ambitious goals – to create once and then re-use many times. In this case, the scope of the models needs to include the requirements of the full breadth of clinical professions and specialties. Clinicians remain key to their development and publication, but the models also require input from:

- Other domain experts – non-clinicians who will want or need to use these same models for non-clinical purposes such as secondary data use;

- Informaticians – who understand how these models will be the basis for recording health information, exchange between systems, reporting, data aggregation and knowledge-based activities;

- Terminologists – to ensure that the models will integrate with appropriate terminology value sets;

- Technicians – who will advise on the technical impacts of these models in systems; and

- Translators – who will ensure that the clinical information is faithfully transformed from one language to another.

Examples of these computable clinical content models are many and varied. There are open source and proprietary models of many different flavours and philosophies – archetypes, templates, detailed clinical models etc. In recent years there are increasing attempts to broaden the input to the creation of these models and even to start to standardise them – regionally, nationally and even internationally. In this new paradigm, the traditional approaches to clinical content development, management and governance are no longer sufficient.

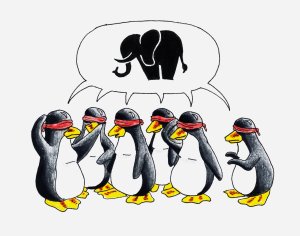

When the full breadth, depth, and dynamic nature of clinical knowledge is considered, it is not feasible to appoint an overarching committee or board that would be capable of providing final ‘sign off’ about the clinical ‘correctness’ of any one model. Each clinical knowledge model will require input from varying groups of expert clinicians, terminologists, informaticians and technicians, depending on the clinical knowledge artefact under review. We need to find innovative approaches to online and asynchronous collaboration among a wide range of individuals with diverse backgrounds, expertise and geographical locations to ensure these models are suitable for use in clinical systems.

Traditional standards bodies, such as ISO, CEN or HL7 have well defined and fixed processes in place for managing the lifecycle of technical standards through a formal balloting process with registered member bodies. These are definitely not suitable for managing and governing an evolving and dynamic clinical content specification library.

There has been some early work on establishing abstract archetype quality criteria by QREC and, more recently, ISO TC 215 Working Group 1 has established a new work item 13972, which is establishing “Quality criteria for detailed clinical models”. However, neither of these is able to establish the quality of archetype instances for real world use.

I believe that HL7 is working to establish a Template Repository. As I understand it, it will operate as an indexing service to templates that will be stored on distributed servers. Others may be able to provide more details.

Other work is no doubt occurring, of which I am not aware. And of course, each clinical system has to establish the clinical content that it will use in its own proprietary information model. In the US alone, with thousands of clinical software vendors, this means that we have thousands of different computable versions of essentially identical clinical content, but none of it interchangeable without mappings or transformation – what a huge waste of resources! We need to change this blinkered way of thinking.

The openEHR Clinical Knowledge Manager (CKM) is the only online clinical knowledge resource, to my knowledge, which is supporting collaboration by clinicians, other domain experts, informaticians, technicians and translators to achieve consensus about quality and safety in clinical content models – in this instance, openEHR archetypes. I am directly involved in the development of this tool, and am active as an Editor facilitating the review process of the archetypes – I have described it in previous blog posts.

While CKM is one of the early Web2.0 approaches to collaborating about clinical content models, I am sure there will be more over time. I have spoken to a number of Knowledge Management experts, and to my surprise no-one has yet been able to point me to similar tools or resources for establishing quality within a Web2.0 environment. Are we really such pioneers? Surely there are similar approaches in other knowledge domains?

No matter. There is no doubt that we are only in the early stages of a transformation in clinical knowledge governance and we have a lot to learn about how to establish quality criteria in a Web2.0 environment. I’ll post some thoughts in my next post...

Waving in eHealth

I’m excited and optimistic about Google Wave.

In my mind, its key strength is as a brilliant hybrid medium for complex, small group conversations:

- allowing tightly focused, tree-like threads, through contextual inline replies;

- synchronous & asynchronous collaboration, wherever useful or most appropriate; and

- inclusion of shared resource files.

So, Google Wave in eHealth - how could it be used?

A few thoughts...

1. Health Conversations

- For private use...

For Patient to Patient or Clinician to Clinician conversations, Wave is a great way for individuals to share thoughts and information on any topic, health included, and no matter what the personal or professional purpose. However if the topic IS health, then there also should be a caveat that the Wave doesn’t contain any private health information. It is not unreasonable to assume that sharing health information in Wave is similar to that of using insecure emails – so just don’t do it!

- For use in healthcare provision...

As a vehicle for a dialogue between Clinician and Patient, Wave is great but it is important to keep in mind that this is not just your average chat, but another format of an online consultation, and all the complications that this brings. If Wave is embedded in an appropriately secure environment, such as an existing EHR/PHR platform with appropriate privacy provisions/authorizations etc. and where versioning of the Wave could be recorded to support the medico-legal record, then Wave could be a great tool in eHealth. Remember that this is a preview and it is a new technology, so there will be hiccups as we all learn to use Wave - there is a significant overhead to using Wave effectively.

One of my first thoughts re the potential clinical use of Wave was how it could have enhanced a Personal Health Record (PHR) that was developed for use by older children and teenagers with Insulin Dependent Diabetes at Royal Children’s Hospital, Melbourne – BetterDiabetes. There is a component within this PHR where the teens can request online assistance and advice from their Diabetes Nurse Educators (DNEs) for management of their diabetes. Armed with appropriate authorization and access permissions, the DNEs can view selected parts of the BetterDiabetes record, including glucose measurements uploaded only minutes beforehand, and make informed, real-time responses back to the teens regarding proposed changes to their care.

In the online version of BetterDiabetes the secure messages flowing back and forth are similar to email, but embedded in the PHR – asynchronous, fragmented and clunky. If these could be replaced by a Wave-like tool for communication, it could be a very useful vehicle for collaborative healthcare provision. Timely information flow in both directions, including the addition of external files to the ‘Wave’, could be extremely valuable.

2. EHRs & EMRs

Wave is NOT appropriate for an EHR/EMR platform. Formal health records should be based on standards such as ISO 18308 – ‘Requirements for an Electronic Health Record Reference Architecture’ and ISO/DTR 20514 – ‘Electronic Health Record Definition, Scope and Context’. Now Wave may be very useful as an interface for communications within that EHR framework, form or structure, but it is definitely not the basis for "…a set of clinical and technical requirements for a record architecture that supports using, sharing, and exchanging electronic health records across different health sectors, different countries, and different models of healthcare delivery." Of some concern, there are some public Waves that are promoting Google Wave as the newest medium for EMRs. One public Wave as an example is: Electronic Medical Records (EMR) and Medical Information Systems: Is Wave the future of electronic medical records? which includes an EMR example.

By all means let's embed the innovative Wave interface for use within a formal EHR/EMR but we need to be careful if we are expecting more from it.

3. Clinical Decision Support

Phil Baumann’s ‘A Clinical Infusion of Google Wave’ blog, featuring Clinybot, is a fascinating, futuristic view of Clinical Decision Support provided for clinicians. Phil states that he assumes all privacy and security aspects are OK when proposing Clinybot - agreed. However, the missing ingredient in Phil’s proposal is not unique to Clinybot; it is the reason why we have so little Clinical Decision Support in practice. In order for Clinybot to function as described, it would have to have a clear semantic handle on the data structure underlying it. Clinical Decision Support can be and is developed on a per EHR/EMR basis; however, standardization of clinical content would enable universal applicability of Clinybot across all EHRs and EMRs. The combination of Clinybot and standardised content could be a very powerful partnership.

_____

I’m a pragmatist; definitely not a futurist. I’ve seen some predictions and anticipated uses for Wave that I think are very optimistic, maybe even a little far-fetched. Perhaps these things might happen... probably not.

And of course there are issues and drawbacks to Wave. TechCrunch's Why Google Wave Sucks, And Why You Will Use It Anyway is a pretty good heads up to the reality of Wave at present.

For the moment I'm more than happy to explore how the benefits of the complex, small group conversations can be leveraged in healthcare, and particularly my clinical modeling work with openEHR. I will keep an open mind to see how Waving in health develops.