Are you planning to participate when the Personally Controlled Electronic Health Record (PCEHR) goes live in July 2012?

I've certainly been pondering what would make me interested in trying it in the first instance, and then in continuing to use it – in my roles as both a Clinician and a Consumer.

In my previous blog post we can see the PCEHR positioned part way between a fully independent Personal Health Record and the Clinician's Electronic Health Record – a hybrid product bridging both domains and requiring an approach that cleverly manages the shared responsibility and mixed governance model.

The scope of the proposed PCEHR is outlined in the Concept of Operations document. A recent presentation [PDF] given at the HIC2011 conference provides a summary of expected functionality.

Recently we've all seen many analyses of the demise of Google Health, and over the past decade or longer we've watched many Personal Health Records come and go. What have we learned? What are the takeaway messages? How can we leverage this momentum in a way that is positive for both consumers and clinicians and potentially transform the delivery of healthcare?

Let's make some general assumptions:

- Core functionality is likely to remain largely as described in the ConOps document;

- Privacy, Security and Authorisation will be dealt with appropriately; and

- Internet skills of users will be taken into account through clever design and workflow.

In a previous post, I suggested that there are 3 additional concepts that need serious consideration if we are to be successful in the development of person-centric health records, such as the PCEHR.

- Health is personal

- Health is social

- Need for liquid data

I've been pondering further and have modified these slightly. I want to explore how we can make health data personally valuable, socially-connected and dynamic or liquid.

Adding value; making it personal

Take a look at any clinician's EHR and the actual data in itself is pretty static and uninteresting – proprietary data structures, HL7v2 messages, CDA documents; facts, evidence, assessments, plans; a sequence of temporal entries which could prove overwhelming when gathered over a lifetime. However, it provides the necessary foundation on which innovative approaches could make that static data become dynamic: to be re-used, leveraged for other purposes, to flow!

Data starts to come to life when we use it creatively to add value or to personalise it, rather than just presenting the raw facts and figures or the interminable lists. The key is to identify the 'hot buttons' for any and all users, clinician or consumer alike – those things that provide some value or personalisation benefit that is not otherwise available.

For the clinician to be engaged, they need to be able to identify at least symmetrical, and preferably increased, value from any effort that they contribute to participating in the creation and maintenance of a shared electronic health record (EHR). This might be recognised in many ways including, but not limited to:

Yet this is clearly not without its unique challenges – for example, JAHIMA's The Problems with Problem Lists! What is being proposed is not trivial at all!

Consider what will engage a patient to take more than a second look at their health record. Google Health creator, Adam Bosworth, in the short excerpt from the TechCrunch video on why Google Health failed, stated that people don't just want some place to store their health information – that they want something more. And Ross Koppel noted in his Google gave up on electronic personal health records, but we shouldn't blog post that "…while knowledge may be power, it isn't willpower." The challenge we face is how to add 'the something more'; to encourage the development of willpower.

Segmenting consumers is one approach to identifying 'hot buttons' that might trigger them to opt in and use the PCEHR:

- Well & Healthy – this is a tricky group to engage as they often see no reason to address any aspects of their health and are not motivated to change behaviour or seek treatment. Some may be interested in preventive health or tracking fitness or diet;

- Worried Well – those who consider themselves at risk of illness and want to ensure that they are (or will be) OK, often tracking aspects of their health to be alert for issues or problems – some call them hypochondriacs;

- Chronically Ill – those living with an ongoing health condition or disability; and

- Acutely Ill – including recent life-changing diagnosis or event

Each group will have specific needs, and these need to be explored to ensure that, once registered, consumers have a persuasive reason to return and actively engage with the PCEHR content or value-added features.

While the 'hot buttons' for consumers might vary, there are common principles that could underpin any data made available and value-added services provided, such that consumers can use, and make sense of, their health information. Examples include:

Socially-connected data?

Hmmm. Traditionally we tend to gravitate to the idea of privacy at all costs when it comes to health information. However, what we really aspire to is making the right information available to the right person at the right time – the safe and secure facilitation of health information communication. That this might extend into the non-clinical space in some circumstances is challenging and somewhat contentious, especially in these relatively early days, but it is conceivable that our traditional, rather paternalistic health paradigm is about to undergo a considerable shakeup.

The area of Telehealth is certainly starting to gain traction between healthcare providers as an electronic proxy for transporting patients long distances for specialist care. Similarly there appears to be rising interest in online consultations and interactions between consumers and their healthcare providers including the re-ordering of prescriptions, requesting of repeat referrals and ability to ask questions via secure messaging. Clearly there are issues and constraints about these activities, but it is very likely that the incidence of consumer-clinician interactions will increase in the near future as consumers recognise the convenience and demand rises.

A person-centric health record that allows shared access from both providers and consumers can act as the hub for these communications. In addition, integration with the Clinician's information system can support the ability for notification to the consumer of availability of diagnostic test results, reminders, tests due, prescriptions due etc.

The importance of social support in health programs such as Quit Smoking and Weight Loss has been well recognised for many years, and it is a common strategy used in clinical practice. In recent times this has been expanding beyond family and friends to the broader community via automated sharing of measurements to social networks such as Twitter. In addition we can consider some early wins in the non-medical sphere – for example, the online PatientsLikeMe community – where consumers are engaging with other consumers with similar issues and concerns. There is also some interesting research emerging about the value of consumer-to-consumer health advice – Managing the Personal Side of Health: How Patient Expertise Differs from Expertise of Clinicians. Contagion Health has developed the Get Up and Move program, where consumers issue challenges to each other to encourage increased activity and to promote exercise, facilitated by a website and Twitter.

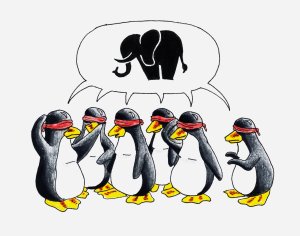

A recent Pew Research Center report, Peer-to-peer Healthcare, stated: "If you enable an environment in which people can share, they will!" And Adam Bosworth made a clear statement that one reason Google Health failed was because it 'wasn't social' – that it was effectively an isolated silo of a consumer's health information. I suspect that we will see this social and connected aspect of healthcare evolve and become more prominent in coming years, as we understand the risks, benefits and potential.

Liquid or Dynamic Data?

One of the most interesting comments about the demise of Google Health was: "Google Health confronted a tower of healthcare information Babel. If health information could have been shared, Google would still be offering that service." That's a very strong statement – not only that the actual data itself was a significant issue but, more specifically, that it was not shareable.

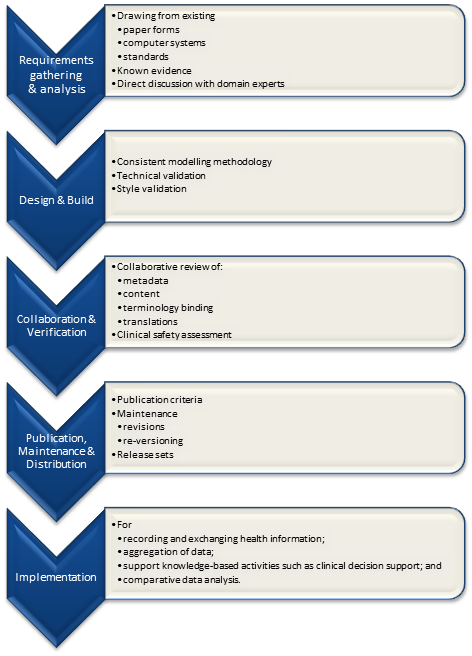

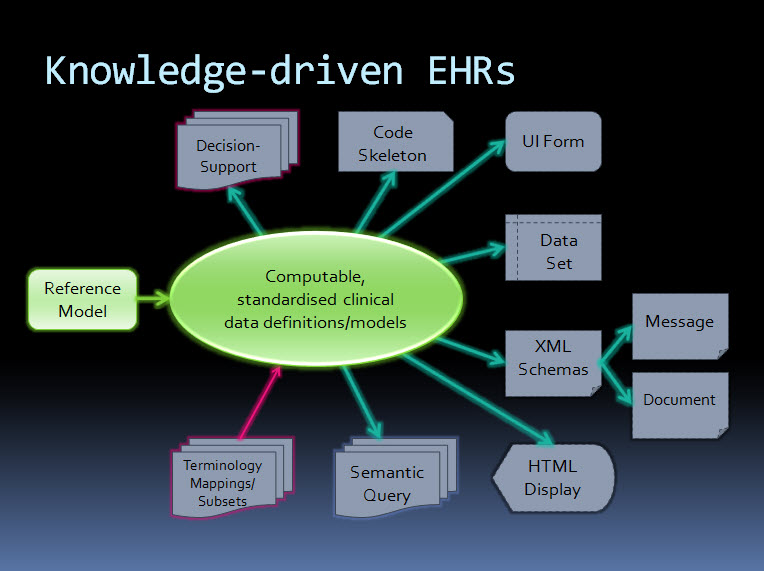

So that requires us to ask how we can make data shareable. "Gimme my damn data" and "Let the data flow", some say - but the result is usually access to their own data in various proprietary formats but no opportunity to bring it together into one cohesive whole which can be used and leveraged to support their healthcare. I've blogged extensively already about the benefits of standardised, computable clinical content patterns known as archetypes – see What on earth is openEHR and Glide Path to Interoperability. To me this approach is simply common sense, and with the rising interest in development of Detailed Clinical Models, perhaps this view is coming closer to reality.

Until we create a lingua franca of health information we can't expect to be able to share health information accurately and unambiguously. We need agreed common clinical content definitions to support sharing of health data such that it can flow, be re-used and be used for additional purposes such as sharing information between applications – making the data dynamic.

As we work out how to approach this shared personal electronic record space, our options will be severely limited unless we have a coherent approach to the data structure and data entry. We need to:

- Develop common data formats and rules for use that will support the flow of health information

- Promote automatic entry and integration of data from multiple source systems – primary care EHR, Laboratory systems, government resources and, increasingly, consumer PHRs. Most users will tolerate some manual entry of data if they receive some value in return, but don't underestimate the barrier to entry for both clinicians and consumers if they have to enter the same data in multiple places.
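To make these two points a little more concrete, here is a minimal Python sketch of what a common format plus automatic integration could look like. Everything in it is an assumption made for illustration: the `Observation` shape, the field names in the two source exports (`wt_kg`, `pounds`, `measured_on`) and the source labels are hypothetical, and none of it comes from the ConOps, openEHR or any real system. The idea is simply that each source is mapped once into an agreed shape, after which the entries can be merged into a single timeline without anyone re-keying data.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Observation:
    """Hypothetical shared format: one agreed shape for a measurement."""
    concept: str    # agreed clinical concept name, e.g. "body_weight"
    value: float
    unit: str
    recorded: date
    source: str     # which system supplied the entry


def from_gp_system(row: dict) -> Observation:
    # Assumed GP export: weight already in kilograms, own field names.
    return Observation("body_weight", float(row["wt_kg"]), "kg",
                       date.fromisoformat(row["date"]), "gp_ehr")


def from_consumer_app(row: dict) -> Observation:
    # Assumed consumer app export: weight in pounds, converted to kilograms.
    kg = round(float(row["pounds"]) * 0.45359237, 1)
    return Observation("body_weight", kg, "kg",
                       date.fromisoformat(row["measured_on"]), "consumer_phr")


def merge(observations: list[Observation]) -> list[Observation]:
    """Order entries by date and drop repeats of the same measurement."""
    seen = set()
    merged = []
    for obs in sorted(observations, key=lambda o: o.recorded):
        key = (obs.concept, obs.recorded, obs.value, obs.unit)
        if key not in seen:  # same fact from two systems is kept once
            seen.add(key)
            merged.append(obs)
    return merged


timeline = merge([
    from_consumer_app({"pounds": "180", "measured_on": "2011-08-15"}),
    from_gp_system({"wt_kg": "82.0", "date": "2011-08-01"}),
])
for obs in timeline:
    print(obs.recorded, obs.value, obs.unit, obs.source)
```

The hard part in practice is not the code but the agreement: the sketch only works because both mappers target the same concept name and unit, which is exactly what shared clinical content definitions are meant to provide.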

To participate, or not?

The core content of the PCEHR as per the ConOps is very clinician-oriented at present, clearly intended for the ultimate benefit of the patient, but focused on information that will be useful to share where multiple clinicians are participating in care or for use in an emergency. The nominated Primary Care clinician will be tasked with the upload and maintenance of the Shared Health Summary. Event Summaries can be uploaded (hopefully this will be automated earlier rather than later). PBS, MBS, ACIR and Organ Donor information will be integrated from Medicare sources.

All of this data will no doubt be extremely useful in some circumstances, but in itself may not be compelling enough to encourage frequent use by clinicians, nor provide them with the symmetrical value they will need in return for maintaining the Health Summary records. From an academic point of view the proposed ConOps is ticking all the correct boxes; however, the big question is whether that will be enough to motivate clinicians to participate and then, more importantly, to stay involved.

Consumer engagement as described in the ConOps is fairly limited – the ability to manually enter 'key information' about allergies and adverse reactions or medications; the location of an advance care directive; and notes about health information that they wish to share. This is hardly enough to capture the imagination of many consumers. There is a real likelihood that those who do opt in may only use it once or twice, including manually entering some of their data, but find that there are no compelling reasons for them to return to their PCEHR record nor to encourage/demand that their Clinician participate.

We have an opportunity to turn the PCEHR into a dynamic person-centred health information hub – one that can be leveraged by clinicians and consumers alike, for the benefit of the consumer's health and wellbeing in the most holistic sense. To do so, the PCEHR needs to prioritise an approach that ensures personalised and/or value-added data; health information that can be shared and communicated clinically and socially; and dynamic data.

At all costs we must avoid:

- a dry, impersonal tool that is ignored or infrequently used by any user, or used by only one type, rather than both consumers and clinicians in partnership;

- barriers to entry for both consumers and clinicians;

- a closed, inward-looking tool;

- a silo of isolated health information operating as yet another 'tower of healthcare information Babel'.

This is our challenge!

We need to change our approach and preserve the integrity of our health data at all costs. After all, it is the only reason why we record any facts or activity in an electronic health record – so we can use the data for direct patient care; share & exchange the data; aggregate and analyse the data; and use the data as the basis for clinical decision support.

Adapted from Martin van der Meer, 2009